Want to deploy a machine learning model but don’t know where to start? This guide walks you through everything, from creating your Azure workspace to having a live API that predicts diabetes risk. We’ll build an automated ML pipeline that trains RandomForest classifiers on the diabetes dataset, then deploy the best model as a REST API. No shortcuts, no assumptions, every single step explained!

By the end of this guide, you’ll have:

- Azure ML workspace configured

- Automated training pipeline (data → preprocessing → modeling)

- Multiple models trained and compared

- Best model deployed as REST API

- Working predictions via HTTP requests

What You’ll Need

- Azure account

- Basic Python knowledge

- 2-3 hours of time

- The diabetes.csv dataset (I’ll show you where to get it)

Part 1: Creating Your Azure ML Workspace

The workspace is your ML project’s home: it contains all your data, models, experiments, and deployments.

Create Azure Machine Learning Workspace

Sign in to the Azure portal (portal.azure.com) and create the ML workspace:

- In the search bar at the top of the portal, type “Machine Learning”

- Click “Machine Learning”

- Click “+ Create” → “New workspace”

Basics tab:

- Subscription: Your subscription

- Resource group: Select your-resource-group (or click “Create new”)

- Workspace name: diabetes-ml-workspace (must be unique within the resource group)

- Region: Same as the resource group (e.g., East US)

- Storage account: Leave as “Create new” (a name is auto-generated)

- Key vault: Leave as “Create new”

- Application insights: Leave as “Create new”

- Container registry: Select “None” (Azure ML creates one automatically the first time it needs to build an image)

- Click “Review + Create”

- Review the summary

- Click “Create”

What’s being created:

- ML Workspace (your main workspace)

- Storage Account (stores datasets, models, logs)

- Key Vault (stores secrets, keys)

- Application Insights (monitoring and logging)

Wait for deployment (2-3 minutes)

You’ll see:

```
Deployment in progress...
├── Storage account ✓
├── Key vault ✓
├── Application Insights ✓
└── Machine Learning workspace ✓
Deployment complete!
```

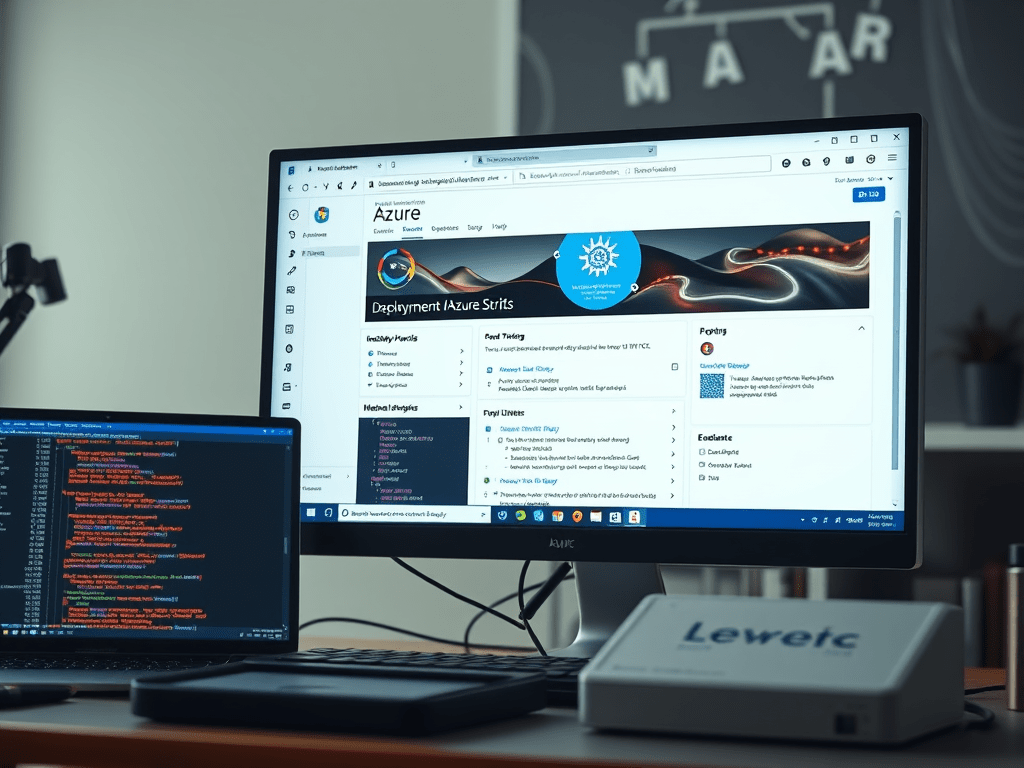

Access Azure ML Studio

- Click “Go to resource”

- You’ll see your workspace overview page

- Click “Launch studio” button (big blue button)

- You’ll be redirected to ml.azure.com

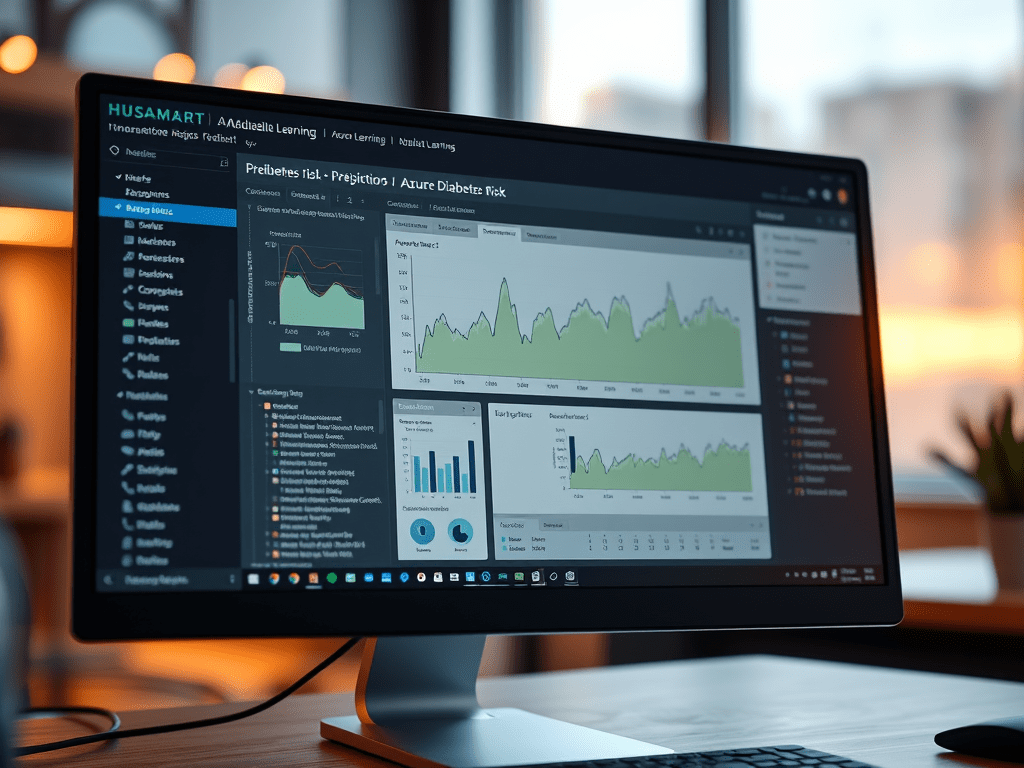

Azure ML Studio is the web interface where you’ll do all your ML work.

Explore the interface:

- Left sidebar (main navigation):

  - Notebooks (Jupyter notebooks)

  - Automated ML (no-code ML)

  - Designer (drag-and-drop ML)

  - Data (datasets and datastores)

  - Compute (computing resources)

  - Models (registered models)

  - Endpoints (deployed models)

  - Experiments (training runs)

  - Pipelines (automated workflows)

Part 2: Creating a Compute Instance (Your Cloud Computer)

A compute instance is a cloud-based virtual machine where you’ll run Jupyter notebooks.

Step 2.1: Create Compute Instance

- In Azure ML Studio, click “Compute” (left sidebar)

- Click “Compute instances” tab

- Click “+ New”

Configure:

- Compute name: notebook-vm (lowercase, no spaces; compute instance names must be unique within the Azure region, so add a suffix such as your initials if it’s taken)

- Virtual machine type: CPU

- Virtual machine size:

  - Click “Select from all options”

  - Search for Standard_DS3_v2 (4 cores, 14 GB RAM)

  - Click “Select”

Advanced Settings:

- Enable SSH access: No

- Enable idle shutdown: Yes (shut down after 30 minutes of inactivity)

- Click “Create”

Wait 3-5 minutes for creation.

Status will show:

Creating → Running

Important: This VM costs roughly $0.27/hour while running (prices vary by region). It auto-stops after 30 minutes of inactivity, but you should still stop it manually when you’re done working!

Step 2.2: Access Jupyter Notebooks

Once your compute instance is running:

- In the compute instances list, find notebook-vm

- Click the “JupyterLab” link (under Application URI)

- A new tab opens with the JupyterLab interface

You’re now in your cloud development environment!

Part 3: Getting the Dataset

Step 3.1: Download Diabetes Dataset

In your Jupyter Lab interface:

- Click “+” (new launcher)

- Click “Terminal” (under Other)

In the terminal, make sure you’re in your user folder (Users/[your username], where your notebooks will live; use cd to get there if needed), then run:

```bash
# Download the diabetes dataset
wget https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.data.csv -O diabetes.csv

# Or, if wget doesn't work, use curl:
curl https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.data.csv -o diabetes.csv
```

Verify download:

```bash
ls -lh diabetes.csv
head diabetes.csv
```

You should see:

```
6,148,72,35,0,33.6,0.627,50,1
1,85,66,29,0,26.6,0.351,31,0
...
```
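If you’d rather check the file from Python than by eye, here’s a small standard-library sketch. It confirms every row has 9 numeric-looking values and counts the rows. The helper name check_raw_csv is made up for this guide; it isn’t part of any Azure tool.

```python
import csv

def check_raw_csv(path, expected_cols=9):
    """Count the rows of a headerless CSV, raising ValueError on a malformed row."""
    n_rows = 0
    with open(path, newline="") as f:
        for line_no, row in enumerate(csv.reader(f), start=1):
            if not row:
                continue  # tolerate blank lines (e.g. a trailing newline)
            if len(row) != expected_cols:
                raise ValueError(f"line {line_no}: expected {expected_cols} values, got {len(row)}")
            float(row[0])  # raises ValueError if the row isn't numeric, e.g. a header row
            n_rows += 1
    return n_rows

# Usage (in a notebook next to diabetes.csv):
# print(check_raw_csv("diabetes.csv"))  # the Pima file has 768 rows
```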

Step 3.2: Add Column Headers

The dataset doesn’t have headers. Let’s add them:

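- In Jupyter, click “+” and open a new Python 3 notebook. Choose a kernel that has the Azure ML SDK v1 (azureml-core) installed, because Step 3.3 uses it in this same notebook.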

- Copy and paste this code:

```python
import pandas as pd

# Read the CSV without headers (the raw file has none).
# Run this cell only once: it overwrites diabetes.csv, so a second run
# would read the new header row as a data row.
df = pd.read_csv('diabetes.csv', header=None)

# Add column names
df.columns = ['Pregnancies', 'Glucose', 'BloodPressure', 'SkinThickness',
              'Insulin', 'BMI', 'DiabetesPedigreeFunction', 'Age', 'Outcome']

# Save with headers (overwrites the original file)
df.to_csv('diabetes.csv', index=False)

print("Headers added!")
print(f"Shape: {df.shape}")
print("\nFirst few rows:")
print(df.head())
```

- Click Run (▶ button) or press Shift+Enter

You should see:

```
Headers added!
Shape: (768, 9)

First few rows:
   Pregnancies  Glucose  BloodPressure ...
0            6      148             72 ...
1            1       85             66 ...
```

- Save this notebook: File → Save Notebook, and name it 01_prepare_data.ipynb
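The cell above overwrites diabetes.csv in place, so running it a second time would treat the new header row as data. If you want a cell that’s safe to rerun, this sketch checks the first row before writing anything. It uses only the standard library, and add_headers_if_missing is a hypothetical helper, not part of pandas or Azure ML.

```python
import csv

COLUMNS = ['Pregnancies', 'Glucose', 'BloodPressure', 'SkinThickness',
           'Insulin', 'BMI', 'DiabetesPedigreeFunction', 'Age', 'Outcome']

def add_headers_if_missing(path, columns=COLUMNS):
    """Prepend a header row unless the file already has one; return True if headers were written."""
    with open(path, newline="") as f:
        rows = [row for row in csv.reader(f) if row]  # skip blank lines
    if rows and rows[0] == list(columns):
        return False  # headers already present: leave the file untouched
    with open(path, "w", newline="") as f:
        writer = csv.writer(f, lineterminator="\n")
        writer.writerow(columns)
        writer.writerows(rows)  # data values are rewritten exactly as read
    return True

# Usage, in place of the pandas cell (safe to run more than once):
# add_headers_if_missing("diabetes.csv")
```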

Step 3.3: Upload to Blob Storage

In the same notebook, add a new cell:

```python
from azureml.core import Workspace, Datastore

# Get workspace (on a compute instance, from_config() finds it automatically)
ws = Workspace.from_config()
print(f"Connected to workspace: {ws.name}")

# Get the default blob datastore
datastore = Datastore.get(ws, 'workspaceblobstore')

# Upload diabetes.csv to the root of the datastore
datastore.upload_files(
    files=['./diabetes.csv'],
    target_path='',
    overwrite=True,
    show_progress=True
)

print("Dataset uploaded to Azure Blob Storage!")
```

Run this cell. You should see:

```
Connected to workspace: diabetes-ml-workspace
Uploading diabetes.csv: 100%
Dataset uploaded to Azure Blob Storage!
```

Part 4: Creating Compute Cluster for Training

Your compute instance is for notebooks. For training, we need a compute cluster that auto-scales.

Step 4.1: Create Compute Cluster

Create a new notebook cell:

```python
from azureml.core import Workspace
from azureml.core.compute import ComputeTarget, AmlCompute
from azureml.core.compute_target import ComputeTargetException

# Get workspace
ws = Workspace.from_config()

# Compute configuration
compute_name = "diabetes-compute"
compute_config = AmlCompute.provisioning_configuration(
    vm_size='STANDARD_DS11_V2',
    min_nodes=0,  # Scale to 0 nodes when idle, so idle time costs nothing for compute
    max_nodes=4,
    idle_seconds_before_scaledown=120
)

# Reuse the cluster if it already exists; otherwise create it
try:
    compute_target = ComputeTarget(workspace=ws, name=compute_name)
    print(f"Found existing compute: {compute_name}")
except ComputeTargetException:
    print(f"Creating new compute: {compute_name}...")
    compute_target = ComputeTarget.create(ws, compute_name, compute_config)
    compute_target.wait_for_completion(show_output=True)
    print("Compute cluster created!")

print("\nCompute details:")
print(f"  Name: {compute_target.name}")
print(f"  VM size: {compute_target.vm_size}")

# The status tells us whether the cluster is ready and how many machines are running
status = compute_target.get_status()

# Provisioning state: "Succeeded" means the cluster is ready to use
print(f"  Provisioning state: {status.provisioning_state}")

# Current node count: how many VMs are running right now
# (0 when idle, which means you're not being charged for compute)
print(f"  Current node count: {status.current_node_count}")

# Target node count: how many VMs the cluster is scaling toward
# (rises when a job starts, returns to 0 once the job is done and the idle timeout passes)
print(f"  Target node count: {status.target_node_count}")

# What these numbers mean:
# - Both counts 0: the cluster is idle and costs nothing for compute
# - Counts between 1 and 4: the cluster is running jobs and you're being charged
# - Provisioning state "Succeeded": the cluster is healthy and ready to use
```

Run this cell. Takes 2-3 minutes.
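Here’s the cost logic behind min_nodes=0 and idle_seconds_before_scaledown=120 as a back-of-the-envelope calculation. Each node is billed for the time it spends on jobs plus the two-minute idle window before it scales back down. This is a rough sketch that ignores node start-up time and non-compute charges. The $0.18/hour rate in the example is a placeholder, not a quoted Azure price for STANDARD_DS11_V2.

```python
def estimated_cluster_cost(job_minutes, nodes, hourly_rate, idle_seconds_before_scaledown=120):
    """Rough compute cost of one job on a cluster that scales down to 0 nodes.

    Assumes every node is busy for the whole job and then sits idle for the
    scale-down window before being released. Node start-up time, storage and
    other non-compute charges are ignored.
    """
    billed_minutes_per_node = job_minutes + idle_seconds_before_scaledown / 60
    return nodes * billed_minutes_per_node / 60 * hourly_rate

# Example with a placeholder $0.18/hour node: a 10-minute pipeline on 1 node
print(f"${estimated_cluster_cost(10, 1, 0.18):.3f}")  # $0.036
```

The zero-node floor is what makes this work: once the idle window passes, the billed minutes stop accumulating until the next job arrives.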

Part 5: Creating Pipeline Scripts

Now let’s create the three Python scripts for our pipeline.

Step 5.1: File Organization

In Jupyter, create a new folder:

- Click folder icon (left sidebar)

- Click “New Folder” button

- Right-click the folder → Rename it to pipeline_scripts

Step 5.2: Create data_wrangling.py

- Click “+” → “Text File”

- Paste this code:

```python
from azureml.core import Workspace, Dataset, Datastore, Run
import pandas as pd
import numpy as np
import os
import argparse
import warnings
warnings.filterwarnings('ignore')

# Parse arguments
parser = argparse.ArgumentParser()
parser.add_argument("--input-data", type=str)
args = parser.parse_args()

# Get run context (works inside an Azure ML pipeline step)
run = Run.get_context()
ws = run.experiment.workspace

# Get datastore
datastore = Datastore.get(ws, 'workspaceblobstore')

# Load data
print(f"Loading: {args.input_data}")
df = Dataset.Tabular.from_delimited_files(
    path=[(datastore, args.input_data)]
).to_pandas_dataframe()

print(f"Data loaded! Shape: {df.shape}")
print(f"Columns: {list(df.columns)}")
print(df.head())
print(df.describe())

# Save for the next step. The Preprocessing step reads this exact file
# name ('wranggled.csv'), so keep the two in sync if you rename it.
output_dir = "tmp"
os.makedirs(output_dir, exist_ok=True)
output_path = os.path.join(output_dir, "wranggled.csv")
df.to_csv(output_path, index=False)

# Upload everything in tmp/ to the root of the datastore
datastore.upload(src_dir=output_dir, target_path="", overwrite=True)

print("Data wrangling complete!")
```

- File → Save As → name: pipeline_scripts/data_wrangling.py

Step 5.3: Create preprocessing.py

- Click “+” → “Text File”

- Paste this code:

```python
from azureml.core import Workspace, Dataset, Datastore, Run
import pandas as pd
import numpy as np
import os
import argparse
from sklearn.preprocessing import QuantileTransformer
import warnings
warnings.filterwarnings('ignore')

parser = argparse.ArgumentParser()
parser.add_argument("--prep", type=str)
args = parser.parse_args()

run = Run.get_context()
ws = run.experiment.workspace
datastore = Datastore.get(ws, 'workspaceblobstore')

# Load data
print(f"Loading: {args.prep}")
df = Dataset.Tabular.from_delimited_files(
    path=[(datastore, args.prep)]
).to_pandas_dataframe()
print(f"Shape before: {df.shape}")

# Remove duplicates
df = df.drop_duplicates()
print(f"Shape after removing duplicates: {df.shape}")

# Handle missing values: in these columns, 0 means "not measured",
# so replace zeros with the mean/median of the non-zero values
df['Glucose'] = df['Glucose'].replace(0, df[df['Glucose'] != 0]['Glucose'].mean())
df['BloodPressure'] = df['BloodPressure'].replace(0, df[df['BloodPressure'] != 0]['BloodPressure'].mean())
df['SkinThickness'] = df['SkinThickness'].replace(0, df[df['SkinThickness'] != 0]['SkinThickness'].median())
df['Insulin'] = df['Insulin'].replace(0, df[df['Insulin'] != 0]['Insulin'].median())
df['BMI'] = df['BMI'].replace(0, df[df['BMI'] != 0]['BMI'].median())
print("Missing values handled")

# Feature selection
feature_cols = ['Pregnancies', 'Glucose', 'SkinThickness', 'BMI', 'Age']
df_selected = df[feature_cols + ['Outcome']]
print(f"Selected features: {feature_cols}")

# Normalize the features only; the Outcome label stays 0/1.
# scikit-learn caps n_quantiles at the number of rows (with a warning), so set it explicitly.
quantile_transformer = QuantileTransformer(n_quantiles=min(1000, len(df_selected)))
X = quantile_transformer.fit_transform(df_selected[feature_cols])
df_normalized = pd.DataFrame(X, columns=feature_cols)
df_normalized['Outcome'] = df_selected['Outcome'].to_numpy()
print("Data normalized")
print(df_normalized.head())

# Save for the next step
output_dir = "tmp"
os.makedirs(output_dir, exist_ok=True)
output_path = os.path.join(output_dir, "preprocessed.csv")
df_normalized.to_csv(output_path, index=False)
datastore.upload(src_dir=output_dir, target_path="", overwrite=True)

print("Preprocessing complete!")
```

- File → Save As → pipeline_scripts/preprocessing.py
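The five imputation lines in preprocessing.py all apply the same rule. They treat 0 as “not measured,” compute the mean or median over the non-zero values only, and put that value where the zeros were. Here’s the rule on its own as a dependency-free sketch. The name impute_zeros is hypothetical; the script itself does this with pandas replace.

```python
from statistics import mean, median

def impute_zeros(values, strategy="mean"):
    """Replace zeros ("not measured") with the mean or median of the non-zero values."""
    if strategy not in ("mean", "median"):
        raise ValueError("strategy must be 'mean' or 'median'")
    observed = [v for v in values if v != 0]
    if not observed:
        raise ValueError("no non-zero values to compute a fill value from")
    fill = mean(observed) if strategy == "mean" else median(observed)
    return [fill if v == 0 else v for v in values]

print(impute_zeros([0.0, 2.0, 4.0], "mean"))         # [3.0, 2.0, 4.0]
print(impute_zeros([0.0, 2.0, 4.0, 9.0], "median"))  # [4.0, 2.0, 4.0, 9.0]
```

Computing the fill value from the non-zero values matters: including the zeros would drag the mean toward 0 and partly defeat the imputation.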

Step 5.4: Create modeling.py

- Click “+” → “Text File”

- Paste this code:

```python
from azureml.core import Workspace, Dataset, Datastore, Run
import pandas as pd
import numpy as np
import os
import argparse
import math
import shutil
import joblib
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import mean_squared_error, precision_score, recall_score, f1_score
import warnings
warnings.filterwarnings('ignore')

parser = argparse.ArgumentParser()
parser.add_argument("--train", type=str)
args = parser.parse_args()

run = Run.get_context()
ws = run.experiment.workspace
datastore = Datastore.get(ws, 'workspaceblobstore')

# Load data
print(f"Loading: {args.train}")
df = Dataset.Tabular.from_delimited_files(
    path=[(datastore, args.train)]
).to_pandas_dataframe()
print(f"Data loaded! Shape: {df.shape}")

# Prepare data: features vs. label, with a stratified 80/20 split
X = df.drop('Outcome', axis=1)
y = df['Outcome']
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)
print(f"Training samples: {len(X_train)}")
print(f"Test samples: {len(X_test)}")

# Train one model per forest size and log its metrics
n_estimators_list = [100, 200, 500]
for n_est in n_estimators_list:
    print(f"\n{'='*60}")
    print(f"Training model: n_estimators={n_est}")
    print(f"{'='*60}")

    model = RandomForestClassifier(
        n_estimators=n_est,
        random_state=42,
        n_jobs=-1
    )
    model.fit(X_train, y_train)
    y_pred = model.predict(X_test)

    # Calculate metrics (for 0/1 labels, RMSE is the square root of the error rate)
    rmse = math.sqrt(mean_squared_error(y_test, y_pred))
    precision = precision_score(y_test, y_pred)
    recall = recall_score(y_test, y_pred)
    f1 = f1_score(y_test, y_pred)

    # Log to Azure ML (one value per model, so each metric holds three values on the run)
    run.log("n_estimators", n_est)
    run.log("rmse", rmse)
    run.log("precision", precision)
    run.log("recall", recall)
    run.log("f1-score", f1)

    print(f"RMSE: {rmse:.4f}")
    print(f"Precision: {precision:.4f}")
    print(f"Recall: {recall:.4f}")
    print(f"F1-Score: {f1:.4f}")

    # Save the model locally and attach it to the run
    output_dir = "outputs"
    os.makedirs(output_dir, exist_ok=True)
    model_path = os.path.join(output_dir, f"model_estimator_{n_est}.pkl")
    joblib.dump(model, model_path)
    run.upload_file(name=f"model_estimator_{n_est}.pkl", path_or_stream=model_path)
    print(f"Model saved: {model_path}")

# Copy the 500-tree model (the one registered in Part 7) to the datastore
tmp_dir = "tmp"
os.makedirs(tmp_dir, exist_ok=True)
shutil.copy(os.path.join("outputs", "model_estimator_500.pkl"),
            os.path.join(tmp_dir, "model_estimator_500.pkl"))
datastore.upload(src_dir=tmp_dir, target_path="", overwrite=True)

run.complete()
print("\nTraining complete!")
```

- File → Save As → pipeline_scripts/modeling.py
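modeling.py gets its metrics from scikit-learn. To see what each number means for a 0/1 classifier, this sketch computes them by hand from the confusion-matrix counts. It also shows why the logged RMSE adds no new information here. With 0/1 labels and 0/1 predictions, every mistake has a squared error of 1, so RMSE is just the square root of the error rate. binary_metrics is a hypothetical helper, not a scikit-learn function, and the labels in the example are made up.

```python
import math

def binary_metrics(y_true, y_pred):
    """Precision, recall, F1 and RMSE for 0/1 labels, with 1 as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # Each wrong 0/1 prediction contributes a squared error of exactly 1, so MSE == error rate
    error_rate = sum(1 for t, p in pairs if t != p) / len(pairs)
    return {"precision": precision, "recall": recall, "f1": f1, "rmse": math.sqrt(error_rate)}

metrics = binary_metrics([1, 1, 0, 0], [1, 0, 1, 0])
print({name: round(value, 4) for name, value in metrics.items()})
# {'precision': 0.5, 'recall': 0.5, 'f1': 0.5, 'rmse': 0.7071}
```

For a diabetes screen, recall (how many actual diabetics were caught) is often the number to watch, which is one reason F1 rather than RMSE is the better basis for picking a model.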

Your folder structure now:

```
Users/
└── [your username]/
    ├── diabetes.csv
    ├── 01_prepare_data.ipynb
    └── pipeline_scripts/
        ├── data_wrangling.py
        ├── preprocessing.py
        └── modeling.py
```

Part 6: Running the Pipeline

Create a new notebook for running the pipeline.

Step 6.1: Create Pipeline Notebook

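- Click “+” → “Python 3” notebook

- Name it 02_run_pipeline.ipynb and save it in the same folder as pipeline_scripts (the steps below point to ./pipeline_scripts with a relative path)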

Step 6.2: Import Libraries

First cell:

```python
from azureml.core import Workspace, Experiment, Environment
from azureml.core.compute import ComputeTarget
from azureml.core.runconfig import RunConfiguration
from azureml.pipeline.steps import PythonScriptStep
from azureml.pipeline.core import Pipeline

print("Libraries imported")
```

Step 6.3: Connect to Workspace

Second cell:

```python
# Get workspace (automatic on a compute instance)
ws = Workspace.from_config()
print(f"Connected to workspace: {ws.name}")
print(f"  Resource group: {ws.resource_group}")
print(f"  Location: {ws.location}")
```

Step 6.4: Get Compute

Third cell:

```python
# Get the compute cluster we created earlier
compute_name = "diabetes-compute"
compute_target = ws.compute_targets[compute_name]
print(f"Using compute: {compute_name}")

# Check the status of our compute cluster
status = compute_target.get_status()

# Provisioning state tells us if the cluster is ready to use:
#   "Succeeded" = everything is good, the cluster is ready
#   "Creating"  = still setting up, wait a bit longer
#   "Failed"    = something went wrong, check the error messages
print(f"  Provisioning state: {status.provisioning_state}")

# Current nodes = how many virtual machines are running right now:
#   0 nodes   = the cluster is idle, you're NOT being charged for compute 💰
#   1-4 nodes = the cluster is working on a job, you ARE being charged
print(f"  Current nodes: {status.current_node_count}")

# Why this matters:
# When you submit a pipeline, current_node_count rises from 0 to 1 (or more).
# When the pipeline finishes and the cluster has been idle for 2 minutes, it drops back to 0.
# At 0 nodes you pay nothing for compute; that's the benefit of auto-scaling.
```

Step 6.5: Configure Environment

Fourth cell:

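```python
# Use a curated (prebuilt) scikit-learn environment.
# If this name isn't available in your workspace, list the options with
# Environment.list(ws) and pick another curated scikit-learn environment.
curated_env = Environment.get(
    workspace=ws,
    name="AzureML-sklearn-0.24-ubuntu18.04-py37-cpu"
)

aml_config = RunConfiguration()
aml_config.target = compute_target
aml_config.environment = curated_env

print("Environment configured")
```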

Step 6.6: Define Pipeline Steps

Fifth cell:

```python
# Step 1: Data Wrangling
step1 = PythonScriptStep(
    name="Data Wrangling",
    script_name='data_wrangling.py',
    source_directory='./pipeline_scripts',
    compute_target=compute_target,
    arguments=['--input-data', 'diabetes.csv'],
    runconfig=aml_config,
    allow_reuse=False
)

# Step 2: Preprocessing
step2 = PythonScriptStep(
    name="Preprocessing",
    script_name='preprocessing.py',
    source_directory='./pipeline_scripts',
    compute_target=compute_target,
    arguments=['--prep', 'wranggled.csv'],
    runconfig=aml_config,
    allow_reuse=False
)

# Step 3: Model Training
step3 = PythonScriptStep(
    name="Model Training",
    script_name='modeling.py',
    source_directory='./pipeline_scripts',
    compute_target=compute_target,
    arguments=['--train', 'preprocessed.csv'],
    runconfig=aml_config,
    allow_reuse=False
)

# The steps pass data through files in the datastore, not through declared
# pipeline inputs/outputs, so Azure ML sees no dependency between them and
# may run them in parallel. Enforce the order explicitly:
step2.run_after(step1)
step3.run_after(step2)

# Create pipeline
pipeline = Pipeline(workspace=ws, steps=[step1, step2, step3])
print("Pipeline created with 3 steps")
```
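Why step order needs attention: Azure ML schedules a pipeline as a dependency graph. A step waits only for the steps it depends on, either through pipeline data it consumes or through an explicit run_after ordering. Steps with no dependency between them may start at the same time. These three scripts hand data to each other through files in the datastore, and Azure ML can’t see that as a dependency. The toy model below uses Python’s graphlib (3.9+) to show which steps become runnable together with and without ordering edges. It only illustrates the idea; it isn’t how Azure ML’s scheduler is implemented.

```python
from graphlib import TopologicalSorter

def execution_batches(dependencies):
    """Group steps into batches that could run concurrently.

    dependencies maps each step name to the set of steps it must wait for.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every step whose dependencies are finished
        batches.append(ready)
        sorter.done(*ready)
    return batches

steps = ["Data Wrangling", "Preprocessing", "Model Training"]

# No declared dependencies: all three steps are ready at once
print(execution_batches({s: set() for s in steps}))
# [['Data Wrangling', 'Model Training', 'Preprocessing']]

# With ordering edges: one step at a time, in pipeline order
print(execution_batches({
    "Data Wrangling": set(),
    "Preprocessing": {"Data Wrangling"},
    "Model Training": {"Preprocessing"},
}))
# [['Data Wrangling'], ['Preprocessing'], ['Model Training']]
```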

Step 6.7: Submit Pipeline

Sixth cell:

```python
# Create experiment
experiment = Experiment(ws, "diabetes-training-pipeline")

# Submit
pipeline_run = experiment.submit(pipeline)
print("Pipeline submitted!")
print(f"  Run ID: {pipeline_run.id}")
print(f"  Portal URL: {pipeline_run.get_portal_url()}")
```

Step 6.8: Monitor Pipeline

Seventh cell:

```python
# Wait for completion (takes 5-10 minutes)
# This shows real-time output from each step
try:
    pipeline_run.wait_for_completion(show_output=True)
    print("\nPipeline complete!")
    print(f"Status: {pipeline_run.get_status()}")
except Exception as e:
    print("\n❌ Pipeline failed!")
    print(f"Error: {str(e)}")
    print("\n🔍 Let's check which step failed...")
    print("\n👉 Click here to see details:")
    print(f"  {pipeline_run.get_portal_url()}")
```

Run all cells! The pipeline will execute and you’ll see output from each step.

Part 7: Registering the Best Model

After the pipeline completes, register the model that modeling.py copied to the datastore (the 500-tree model).

Step 7.1: Download Best Model

Create a new notebook named 03_register_model.ipynb:

```python
from azureml.core import Workspace, Datastore, Model

# Get workspace
ws = Workspace.from_config()
datastore = Datastore.get(ws, 'workspaceblobstore')

# Download the model file from the datastore into ./models
datastore.download(
    target_path="./models",
    prefix="model_estimator_500.pkl",
    overwrite=True,
    show_progress=True
)

print("Model downloaded")
```

Step 7.2: Register Model

New cell:

```python
# Register model
model = Model.register(
    workspace=ws,
    model_path="./models/model_estimator_500.pkl",
    model_name="diabetes-classifier",
    tags={
        'algorithm': 'RandomForest',
        'n_estimators': '500',
        'f1-score': '0.76'  # replace with the F1 score your run logged for this model
    },
    description="RandomForest (500 trees) for diabetes prediction"
)

print("✅ Model registered!")
print(f"  Name: {model.name}")
print(f"  Version: {model.version}")
```
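The tags above hard-code the 500-tree model and an F1 of 0.76. Because modeling.py calls run.log once per model, each metric on the Model Training step run holds one value per model, in training order. In SDK v1, Run.get_metrics() returns a metric logged several times as a list. Assuming you’ve fetched that dictionary from the step run, this hypothetical helper picks the model with the highest F1 and builds the tags from the real numbers. The metric values in the example are invented for illustration.

```python
def best_model_tags(metrics, key="f1-score"):
    """Pick the best n_estimators by a logged metric and build registration tags.

    metrics is assumed to look like {"n_estimators": [100, 200, 500], "f1-score": [...]},
    with one list entry per trained model, in the order the values were logged.
    """
    scores = metrics[key]
    best_idx = max(range(len(scores)), key=lambda i: scores[i])
    n_est = metrics["n_estimators"][best_idx]
    tags = {
        "algorithm": "RandomForest",
        "n_estimators": str(n_est),
        key: f"{scores[best_idx]:.2f}",
    }
    return tags, f"model_estimator_{n_est}.pkl"

# Invented values; use the dictionary your own step run returns
tags, filename = best_model_tags({"n_estimators": [100, 200, 500], "f1-score": [0.70, 0.74, 0.72]})
print(tags, filename)
# {'algorithm': 'RandomForest', 'n_estimators': '200', 'f1-score': '0.74'} model_estimator_200.pkl
```

If the winner isn’t the 500-tree model, its .pkl is still attached to that step run, since modeling.py uploads every model it trains. Only the 500-tree file is copied to the datastore.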

Part 8: Deploying the Model

Step 8.1: Create score.py

- Click “+” → “Text File”

- Paste:

```python
import joblib
import json
import numpy as np
import os

def init():
    # Called once when the container starts: load the registered model
    global model
    model_path = os.path.join(os.getenv('AZUREML_MODEL_DIR'), 'model_estimator_500.pkl')
    model = joblib.load(model_path)
    print("Model loaded")

def run(raw_data):
    # Called per request. Expects {"data": [[...], ...]} where each row has the
    # 5 training features in order: Pregnancies, Glucose, SkinThickness, BMI, Age
    try:
        data = json.loads(raw_data)['data']
        data = np.array(data)
        predictions = model.predict(data)
        probabilities = model.predict_proba(data)

        results = []
        for i, pred in enumerate(predictions):
            results.append({
                "prediction": int(pred),
                "diagnosis": "Diabetic" if pred == 1 else "Non-diabetic",
                "confidence": float(max(probabilities[i]))
            })
        return {"results": results}
    except Exception as e:
        return {"error": str(e)}
```

- Save as: score.py
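Before waiting 10-15 minutes on a deployment, you can exercise the same request/response logic locally. This sketch mirrors run() from score.py with two illustrative changes. A hypothetical StubModel stands in for the pickled forest, so the sketch runs without scikit-learn or numpy. Each row is also checked for the five features the model was trained on (Pregnancies, Glucose, SkinThickness, BMI, Age).

```python
import json

N_FEATURES = 5  # Pregnancies, Glucose, SkinThickness, BMI, Age (Outcome is dropped in modeling.py)

class StubModel:
    """Hypothetical stand-in for the RandomForest: predicts 1 when the second feature exceeds 0.5."""
    def predict(self, rows):
        return [1 if row[1] > 0.5 else 0 for row in rows]

    def predict_proba(self, rows):
        return [[0.2, 0.8] if row[1] > 0.5 else [0.9, 0.1] for row in rows]

def score(model, raw_data):
    """Same response shape as run() in score.py, plus a feature-count check."""
    try:
        data = json.loads(raw_data)["data"]
        for row in data:
            if len(row) != N_FEATURES:
                raise ValueError(f"expected {N_FEATURES} features per row, got {len(row)}")
        predictions = model.predict(data)
        probabilities = model.predict_proba(data)
        return {"results": [
            {
                "prediction": int(pred),
                "diagnosis": "Diabetic" if pred == 1 else "Non-diabetic",
                "confidence": float(max(probabilities[i])),
            }
            for i, pred in enumerate(predictions)
        ]}
    except Exception as e:
        return {"error": str(e)}

print(score(StubModel(), json.dumps({"data": [[0.6, 0.9, 0.4, 0.7, 0.8]]})))
# {'results': [{'prediction': 1, 'diagnosis': 'Diabetic', 'confidence': 0.8}]}
print(score(StubModel(), json.dumps({"data": [[6, 148, 35, 33.6, 50, 1]]})))
# {'error': 'expected 5 features per row, got 6'}
```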

Step 8.2: Deploy via Portal

- Go to Azure ML Studio

- Click “Models” → “diabetes-classifier”

- Click “Deploy” → “Deploy to real-time endpoint”

- Configure:

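  - Endpoint name: diabetes-api

  - Compute type: Azure Container Instance

  - CPU: 1

  - Memory: 1 GB

- Upload score.py as the entry script

- If the form asks for an environment, select the curated scikit-learn environment you used for training (the container needs scikit-learn to load the model)

- Click “Deploy”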

Wait 10-15 minutes.

Step 8.3: Test Endpoint

Once deployed, in a new notebook:

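```python
import requests
import json

# Get endpoint details from Azure ML Studio → Endpoints
endpoint_url = "YOUR_ENDPOINT_URL"
api_key = "YOUR_API_KEY"  # only needed if key-based authentication is enabled

# One row with the 5 features the model was trained on:
# Pregnancies, Glucose, SkinThickness, BMI, Age
test_data = {
    "data": [[6, 148, 35, 33.6, 50]]
}

headers = {
    'Content-Type': 'application/json',
    'Authorization': f'Bearer {api_key}'
}

response = requests.post(endpoint_url, json=test_data, headers=headers)
print(json.dumps(response.json(), indent=2))
```

Note that preprocessing.py quantile-transforms the features into the 0 to 1 range before training, so the model expects inputs on that scale. Raw measurements like the ones above still return a prediction, but it won’t be meaningful until the same transformation is applied before scoring (for example, by saving the fitted QuantileTransformer and loading it in score.py next to the model).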
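A quantile transformer with uniform output maps each training value to roughly its rank among the training values. Transformed features therefore always fall between 0 and 1, and a model trained on them learns split thresholds in that range. The standard-library sketch below shows what happens when such a model receives raw values instead. It is a simplified stand-in, not scikit-learn’s QuantileTransformer (which interpolates between stored quantiles). The Glucose readings and the 0.6 split threshold are made up for illustration.

```python
from bisect import bisect_left, bisect_right

def fit_uniform_quantiles(train_values):
    """Simplified uniform quantile mapping fitted on training values.

    Each value maps to its (midpoint) rank among the sorted training values,
    scaled to [0, 1]; values at or beyond the training extremes clip to 0 or 1.
    """
    ordered = sorted(train_values)
    n = len(ordered)

    def transform(x):
        if x <= ordered[0]:
            return 0.0
        if x >= ordered[-1]:
            return 1.0
        lo = bisect_left(ordered, x)   # number of training values strictly below x
        hi = bisect_right(ordered, x)  # number of training values at or below x
        return ((lo + hi - 1) / 2) / (n - 1)

    return transform

# Made-up training Glucose readings and a hypothetical split on the transformed scale
transform = fit_uniform_quantiles([85, 100, 120, 148, 183])
threshold = 0.6

for raw in (100, 148):
    print(f"Glucose {raw}: transformed {transform(raw):.2f} -> high={transform(raw) > threshold}, "
          f"raw -> high={raw > threshold}")
# Glucose 100: transformed 0.25 -> high=False, raw -> high=True
# Glucose 148: transformed 0.75 -> high=True, raw -> high=True
```

On the transformed scale, 100 falls below the split and 148 above it. Sent raw, both look “high” to every split, which is why scoring has to apply the same fitted transformer that training used.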

Part 9: Clean Up

Stop Your Compute Instance

IMPORTANT: To avoid charges:

- Go to Compute → Compute instances

- Select your instance

- Click “Stop”

Or set auto-shutdown:

- Compute → select instance → “Edit”

- Enable “Idle shutdown”

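- Set to 30 minutes

Delete the Endpoint

An Azure Container Instance deployment is billed for as long as it runs, even when it isn’t receiving requests. When you’re done testing:

- Go to Endpoints → diabetes-api

- Click “Delete”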

Conclusion

🎉 You did it! All in Azure ML Studio – no local setup needed!

What you accomplished:

- ✅ Created a workspace

- ✅ Used Jupyter notebooks in the cloud

- ✅ Built an automated ML pipeline

- ✅ Deployed a REST API

Next steps:

- Integrate API in web/mobile apps

- Set up monitoring

- Try different algorithms
