AI adoption is accelerating, and most models are now trained, deployed and scaled in the cloud. While this enables innovation, it also introduces unique security challenges. These range from protecting sensitive training data to defending against AI-specific attacks.

Imagine your company is launching its first cloud-based, AI-powered service. There will be incredible excitement around the technology, but in my experience there is also nervousness. To get past it, you need the right blueprint.

In today’s post, I will be talking about the real risks, the honest challenges and the practical best practices for securing AI in the cloud.

Risks

One of the biggest risks of running AI in the cloud is data exposure. Training datasets often contain sensitive personal or business information. If they are not encrypted or properly secured, they can easily be leaked or misused. A breach is not just a PR nightmare; it is a fundamental betrayal of trust.

Another growing risk is model theft or manipulation. Trained AI models represent valuable intellectual property, so attackers may try to steal them outright or exfiltrate the training data behind them. They may also poison a model by injecting biased or incorrect data into your training pipeline, or feed it adversarial inputs crafted to cause errors in its predictions.
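
One basic defence against tampering with the training pipeline is an integrity check on the data itself. The sketch below is a minimal illustration, assuming the training data lives as files in a directory and that the trusted manifest is stored somewhere attackers cannot write to; the function names are hypothetical. Note its limits: it only detects changes made after the manifest was recorded and cannot tell whether data was already poisoned at the source.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map every file under data_dir (by relative path) to its SHA-256 hash."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_of_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def find_tampered(data_dir: Path, trusted: dict[str, str]) -> list[str]:
    """List files that were changed, removed or added since the trusted manifest."""
    current = build_manifest(data_dir)
    changed_or_removed = [n for n, h in trusted.items() if current.get(n) != h]
    added = [n for n in current if n not in trusted]
    return sorted(changed_or_removed + added)


# Usage: record a manifest, simulate tampering, then re-check.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("id,label\n1,cat\n")
    manifest = build_manifest(root)
    (root / "train.csv").write_text("id,label\n1,dog\n")  # simulated label flip
    print(find_tampered(root, manifest))  # ['train.csv']
```

In a real pipeline, a check like this would typically run as a gate before each training job, with the manifest signed or kept in a separate, access-controlled store.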

Beyond that, infrastructure misconfigurations remain a significant threat to cloud workloads. Common examples include publicly accessible storage buckets, overly permissive IAM roles and poorly secured Kubernetes clusters.
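
Overly permissive IAM roles are often easy to spot in the policy document itself. The following is a rough heuristic sketch, not a replacement for tools like AWS IAM Access Analyzer: it assumes AWS-style JSON policies and flags `Allow` statements that use wildcard actions or a wildcard resource. It deliberately ignores `NotAction`, `NotResource` and conditions, and the bucket name in the example is hypothetical.

```python
import json


def _as_list(value) -> list:
    """IAM lets Action and Resource be either a single string or a list."""
    if value is None:
        return []
    return [value] if isinstance(value, str) else list(value)


def overly_permissive_statements(policy: dict) -> list[dict]:
    """Return Allow statements that use wildcard actions or a wildcard resource.

    Heuristic only: ignores NotAction/NotResource, conditions and Deny statements.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a lone statement may be written as an object
        statements = [statements]
    findings = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = _as_list(stmt.get("Action"))
        resources = _as_list(stmt.get("Resource"))
        # "*" grants every action; "service:*" grants every action in one service.
        wildcard_action = any(a == "*" or a.endswith(":*") for a in actions)
        wildcard_resource = "*" in resources
        if wildcard_action or wildcard_resource:
            findings.append(stmt)
    return findings


# Usage with a hypothetical policy document.
policy = json.loads("""
{
  "Version": "2012-10-17",
  "Statement": [
    {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
    {"Effect": "Allow", "Action": ["s3:GetObject"],
     "Resource": "arn:aws:s3:::example-training-data/*"}
  ]
}
""")
for stmt in overly_permissive_statements(policy):
    print("Too broad:", stmt)
```

Only the first statement is flagged; the second grants a single read action on one bucket prefix, which is what least privilege looks like in practice.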

There is also the issue of cost exploitation. If an account is hijacked, attackers can spin up GPU-heavy workloads for cryptocurrency mining, which can run up huge bills.

Finally, organizations face the risk of compliance violations. Sensitive AI workloads must adhere to regulations and standards such as GDPR, HIPAA or SOC 2.

Challenges

Protecting massive datasets is not easy. AI needs large, diverse data to be accurate, yet privacy laws limit how that data can be stored and processed.

Detecting sophisticated attacks like adversarial inputs or model poisoning is challenging. These attacks are subtle and are often invisible to traditional monitoring systems.

On top of this, AI workloads typically run across complex cloud environments that span containers, GPUs and serverless pipelines, and each of these elements requires different security controls.

Regulatory compliance adds another layer of difficulty. AI innovation often moves faster than regulation. This creates gaps between what is possible and what is legally required.

Finally, cost visibility is a practical challenge. Monitoring and controlling the use of expensive compute resources in real time can be difficult. This is especially true when multiple teams or models are running at the same time.

Best Practices

Organizations need to protect data by enforcing encryption both at rest and in transit. They should anonymize sensitive data where possible, enforce least privilege access control and adopt a zero trust architecture.
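
One common way to strip direct identifiers before data reaches a training pipeline is keyed hashing. The sketch below is an assumption-laden illustration, not a complete anonymization scheme: it replaces chosen fields with HMAC-SHA256 tokens, which is strictly pseudonymization (anyone holding the key can re-link records), so under GDPR the output may still count as personal data. The field names and the locally generated key are hypothetical.

```python
import hashlib
import hmac
import secrets


def pseudonymize(value, key: bytes) -> str:
    """Replace a value with a keyed HMAC-SHA256 token (same input + key -> same token)."""
    return hmac.new(key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_records(records: list[dict], fields: set[str], key: bytes) -> list[dict]:
    """Return copies of records with the named identifier fields pseudonymized."""
    return [
        {k: pseudonymize(v, key) if k in fields else v for k, v in record.items()}
        for record in records
    ]


# Usage. The key is generated here for illustration only; in practice it would be
# loaded from a secrets manager and kept separate from the pseudonymized data.
key = secrets.token_bytes(32)
records = [
    {"email": "alice@example.com", "age": 34},
    {"email": "alice@example.com", "age": 35},
]
safe = pseudonymize_records(records, {"email"}, key)
print(safe[0]["email"] == safe[1]["email"])  # True: joins across records still work
print(safe[0]["age"])  # 34: non-identifying fields pass through unchanged
```

A keyed HMAC is used rather than a plain hash because identifiers like email addresses are easy to guess, and an unkeyed hash of a guessable value can be reversed by brute force.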

Models themselves should also be secured with authentication at endpoints, traffic monitoring, adversarial training and watermarking to protect intellectual property.
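
Endpoint authentication is usually handled by a managed layer such as an API gateway or the provider's IAM, but the underlying idea can be shown with a signed request. The sketch below assumes a hypothetical scheme in which each client shares a secret with the model endpoint and signs a timestamp plus the request body; the names and the five-minute window are illustrative, not any provider's API.

```python
import hashlib
import hmac
import time
from typing import Optional

MAX_SKEW_SECONDS = 300  # hypothetical window for rejecting stale or replayed requests


def sign(body: bytes, timestamp: int, secret: bytes) -> str:
    """Client side: sign the timestamp and request body with a shared secret."""
    message = str(timestamp).encode("ascii") + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(body: bytes, timestamp: int, signature: str, secret: bytes,
           now: Optional[int] = None) -> bool:
    """Server side: reject stale requests, then compare signatures in constant time."""
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False
    return hmac.compare_digest(sign(body, timestamp, secret), signature)


# Usage with a hypothetical secret; load real secrets from a secrets manager.
secret = b"example-shared-secret"
ts = int(time.time())
body = b'{"prompt": "hello"}'
sig = sign(body, ts, secret)
print(verify(body, ts, sig, secret))  # True
print(verify(b'{"prompt": "tampered"}', ts, sig, secret))  # False
```

`hmac.compare_digest` is used instead of `==` so that the comparison time does not leak how many characters of a forged signature were correct.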

On the infrastructure side, teams should harden their cloud environments by locking down IAM roles and patching infrastructure regularly. They should also use monitoring and threat-detection tools such as AWS GuardDuty, Azure Monitor or Microsoft Defender for Cloud (formerly Azure Defender).

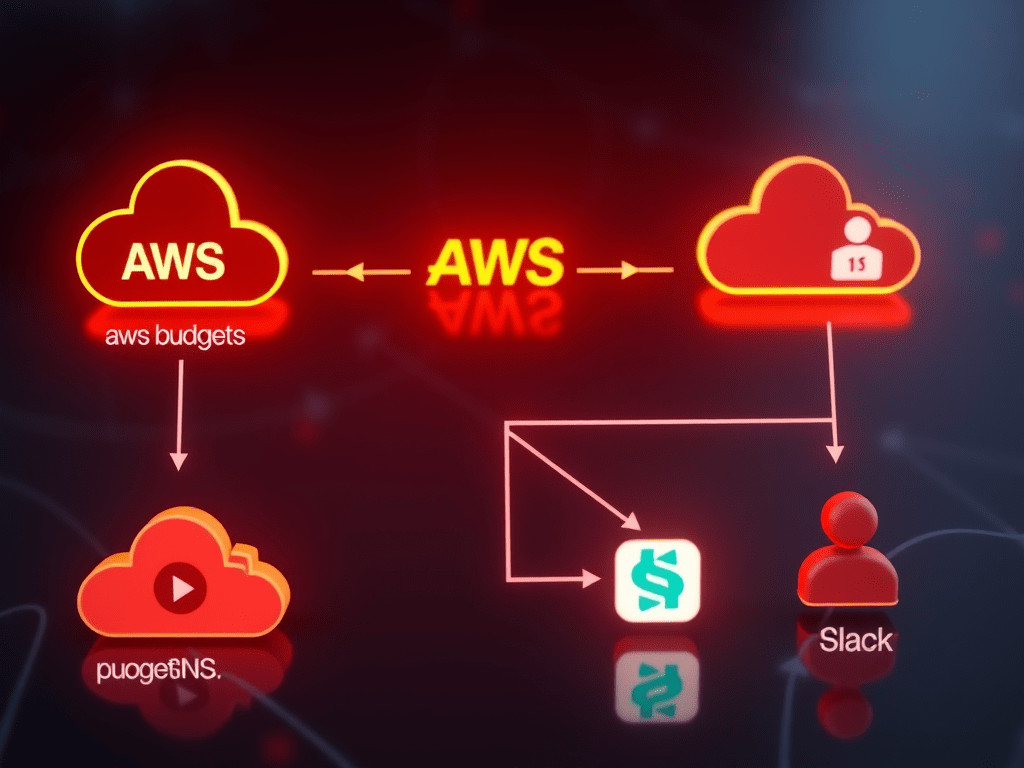

Cost-related risks can be mitigated by setting budgets, quotas and alerts. It is also important to store API keys securely in a secrets manager rather than in code or configuration files.
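
Managed services such as AWS Budgets or Azure Cost Management implement budgets and alerts for you, but the logic they apply is simple. The sketch below assumes hypothetical per-team monthly budgets with alert thresholds expressed as fractions of the limit, plus a hard cap on concurrent GPUs; none of the names correspond to a real provider API.

```python
from dataclasses import dataclass


@dataclass
class Budget:
    team: str
    monthly_limit: float  # in the account's billing currency
    alert_thresholds: tuple[float, ...] = (0.5, 0.8, 1.0)  # fractions of the limit


def triggered_alerts(budget: Budget, spend_to_date: float) -> list[float]:
    """Return every threshold the current month-to-date spend has crossed."""
    ratio = spend_to_date / budget.monthly_limit
    return [t for t in budget.alert_thresholds if ratio >= t]


def gpu_request_allowed(running_gpus: int, requested_gpus: int, quota: int) -> bool:
    """Enforce a hard cap on concurrent GPUs, regardless of who is asking."""
    return running_gpus + requested_gpus <= quota


# Usage with hypothetical figures.
research = Budget(team="ml-research", monthly_limit=10_000.0)
print(triggered_alerts(research, 8_500.0))  # [0.5, 0.8]
print(gpu_request_allowed(running_gpus=6, requested_gpus=4, quota=8))  # False
```

The two controls complement each other: alerts tell you spend is drifting, while the quota stops a hijacked account from launching a mining fleet before anyone reads the alert.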

Finally, strong governance is essential. All activity should be logged with services like CloudTrail or Azure Monitor. Organizations should adopt frameworks such as the NIST AI Risk Management Framework.

Teams must document model purpose, limitations and data sources to support audits and compliance.
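
Documentation is easier to keep complete when it is structured data rather than free text. The sketch below is one possible shape, loosely in the spirit of a model card; the `ModelRecord` class, its fields and the example model are all hypothetical, and a real record would likely carry more detail such as owners, evaluation results and approval dates.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelRecord:
    """Minimal documentation record for a deployed model (fields are illustrative)."""
    name: str
    version: str
    purpose: str
    limitations: list[str] = field(default_factory=list)
    data_sources: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Names of fields left empty, so gaps surface before an audit does."""
        return [name for name, value in asdict(self).items() if not value]


# Usage with a hypothetical model.
record = ModelRecord(
    name="churn-predictor",
    version="1.2.0",
    purpose="Flag customers at risk of cancelling their subscription",
    data_sources=["crm-exports-2023"],
)
print(record.missing_fields())  # ['limitations']
print(json.dumps(asdict(record), indent=2))  # store alongside the model artifact
```

A check like `missing_fields` could run in a deployment pipeline so that a model without documented limitations or data sources never reaches production.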

AI in the Cloud – Risks & Mitigation

1. Data Security & Privacy: AI models need huge datasets, often with sensitive information. Breaches or leaks can be devastating.

* Encrypt training data at rest and in transit.

* Use secure data lakes with fine-grained IAM/RBAC controls.

* Apply anonymization and zero trust techniques.

2. Model Security: Trained AI models are valuable intellectual property and can be targeted for theft or manipulation.

* Use access controls and monitoring around model endpoints.

* Regularly test models against adversarial inputs and validate their outputs.

* Employ model watermarking to protect IP.

3. Cloud Infrastructure Risks: AI workloads rely on GPUs, containers and serverless pipelines. These can all be entry points if misconfigured.

* Apply least privilege to IAM roles and service accounts.

* Enable threat detection and security posture tools such as AWS GuardDuty and AWS Security Hub.

* Automate compliance with tools like AWS Config or Azure Policy.

4. Cost Exploitation: Attackers can hijack AI cloud resources for crypto mining or model abuse.

* Set budgets and alerts (for example with AWS Budgets) and monitor usage with CloudWatch and Cost Explorer.

* Use quotas and throttling to control resource use.

5. Compliance & Governance: AI workloads often fall under stricter compliance requirements (GDPR, HIPAA, SOC 2).

* Implement logging and audit trails with CloudTrail.

* Adopt AI-specific governance frameworks such as the NIST AI Risk Management Framework.

Take the Bold Step

Moving your AI to the cloud does not have to be a leap of faith. It can be a strategic, secure and powerful step for your business. The key is to shift your mindset. Stop seeing security as a one-time box to tick; treat it as an ongoing conversation and make it a core part of your AI culture.

I suggest you start small, focus on your most critical assets and don't be afraid to ask for help. The cloud providers themselves publish extensive documentation and well-architected frameworks with dedicated security guidance. And of course, consultants like me are here to help you navigate the path.

Please reach out if you need any help.

Appointment – Scopnatic Consulting

Thank you for reading.
